Data analytics used to be a buzzword. It no longer is; it is now a standard business requirement. Over the past decade we have gone from asking “Does your business need data analytics?” to “How can data analytics transform your business?” Simply put, analytics has become essential to the growth and development of the modern organisation. Today, businesses of all sizes can employ analytics to gather insights into the health of their processes, and to identify opportunities for improvement and expansion. But before we discuss how analytics can add value to a business, let’s define the term “data analytics”.

A different definition

The word “analytics” has been in regular use for a few decades now. However, it can be traced back many centuries to the Greek “ἀναλυτικά” (Latin “analyticvs”), which means “the science of analysis”. In modern usage, analytics is defined simply as “the method of logical analysis”.

Today, the word most commonly appears in the term “data analytics”, which refers to the process, or set of processes, for collecting, storing, processing, and interpreting data. This is the most common definition, and it has evolved over the years along with the technological capability of the time. It is, in my opinion, quite reductive. I would prefer to expand that definition:

Data analytics is a framework for organisations to acquire, store, process, and interpret data, for the purpose of analysing incidents, gathering insights, identifying patterns, predicting events, and reporting relevant business metrics for further action.

A framework?

You read that right. There are a number of definitions for “data analytics” available on the internet. Most define data analytics as a process, and they are all right. These definitions have mostly come from industry leaders, both organisations and people, who have invested heavily and worked in the field of data science for decades, so there is no denying their “correctness”. However, I believe they reduce the modern analytics process to simply “analysing” data.

I like to think of data analytics as a framework on which businesses can logically build their analytical capability, by methodically developing systems that address each step of the data lifecycle, from data generation and acquisition through to data archiving.

Why redefine what isn’t wrong?

When we think of data analytics as a process, we are more likely to focus on the “analysis” portion of analytics. Data analytics, however, is a lot more than the analysis of data. It involves many steps, or rather sub-processes, leading up to analysis, and a few more following it. Each of these sub-processes requires careful consideration and implementation, because it can affect the quality and outcome of every other step in the overall analytics workflow.

Not only that, thinking of data analytics as a process leads many to believe that analytics is a linear exercise: that it starts with gathering data and ends with visualising the results of analysis. This is quite far from real-world scenarios. In most projects, data moves from one sub-process to another, often branching here and converging there, returning to a prior sub-process, or skipping a few sub-processes altogether. How data moves through a data analytics system will depend on a number of business rules and requirements.
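To make the non-linear picture concrete, here is a minimal Python sketch that models a workflow as a directed graph rather than a straight line. The stage names and the edges between them are my own illustrative assumptions, not a prescribed design; in a real project they would follow from your business rules.

```python
# Hypothetical stages and edges; a real workflow is shaped by business rules.
WORKFLOW = {
    "acquisition": ["storage"],
    "storage": ["cleaning", "archiving"],  # branching
    "cleaning": ["storage", "feature_engineering", "reporting"],  # go back, or skip ahead
    "feature_engineering": ["analysis"],
    "analysis": ["reporting", "feature_engineering"],  # iterate on features
    "reporting": ["storage"],  # output of the workflow is stored as data too
    "archiving": [],
}


def reachable(graph, start):
    """Return every stage that can be reached from `start` by following edges."""
    seen = set()
    stack = [start]
    while stack:
        node = stack.pop()
        for nxt in graph[node]:
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


def has_cycle(graph):
    """True if any stage can reach itself, i.e. the flow is not strictly linear."""
    return any(stage in reachable(graph, stage) for stage in graph)


print(has_cycle(WORKFLOW))
print(sorted(reachable(WORKFLOW, "acquisition")))
```

A strictly linear pipeline would contain no cycles; the moment cleaned data is written back to storage, or analysis sends you back to feature engineering, the graph loops.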


Looking at data analytics as a framework encourages us to acknowledge the various sub-processes within the analytics workflow, and how they interact with each other. It also allows us to take into account how each of these sub-processes may share resources with different business systems outside of the workflow. It lets technical and business teams plan how existing resources that are not currently used for analytics could be integrated into the workflow.

So rather than picturing data analytics as a bidirectional number line, you could think of it as a two-dimensional plane that overlaps with other two-dimensional planes in certain areas, and intersects with them in others.

Parts to a Whole

Moving beyond definitions and arguments, let’s look at the different sub-processes that make data analytics possible. While real-world applications and implementations of data analytics can look vastly different, they almost always include the following components:

- Data Acquisition & Source Integration

- Data Storage & Warehousing

- Data Cleaning & Preliminary Analysis

- Feature Engineering

- Statistical Analysis & Machine Learning

- Data Visualisation & Reporting

- Data Archiving

All of these sub-processes contribute towards the efficiency of your analytics workflow, which is why a data analytics project must include specialists with an understanding of the whole. Decisions made for and at each segment could either derail or simplify the end result.

Data Acquisition & Source Integration

No matter the size of your business, there are always operations that generate relevant data. There will also be operations that access and process data originating outside of your business. The goal of data analytics is to make sense of this data, understand how your business is performing, and finally improve business operations. A data analytics workflow must accommodate systems that can record data generated across different teams and activities. This includes data generated by the analytics workflow itself. An analytics workflow must also include tools that can integrate data sources which are external to your business.

Data Storage & Warehousing

Once your business has identified valid sources of data, and found ways to access these sources, it needs to find ways to store this data in a manner that is manageable and effective over the long term. Much of the data generated by business processes is “raw” in nature. This data needs to be organised so that it can be easily and repeatedly accessed and processed. It needs to become meaningful. A business’ data storage strategy can affect its analytics capability. Poorly developed storage systems can slow down the analytics workflow and unnecessarily increase operational expenditure. An efficient data storage strategy will take into account the volume of data generated and the velocity at which it is generated. It will also manage data backups for disaster management and recovery.
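As a rough illustration of how volume and velocity feed into storage planning, the back-of-the-envelope estimate below multiplies the rate of incoming records by their size and retention period. The function name, its parameters, and the example figures are all hypothetical, and the calculation ignores compression, indexes, and growth.

```python
def projected_storage_gib(records_per_day, bytes_per_record, retention_days, copies=1):
    """Estimate raw storage in GiB.

    `copies` is the number of stored copies, e.g. 2 for primary data plus one backup.
    """
    total_bytes = records_per_day * bytes_per_record * retention_days * copies
    return total_bytes / 1024 ** 3


# Hypothetical workload: 1 million records a day, about 1 KiB each,
# kept for a year, with one backup copy.
estimate = projected_storage_gib(1_000_000, 1024, 365, copies=2)
print(f"{estimate:.1f} GiB")
```

Even a crude estimate like this helps frame early conversations about cost and about how long data should stay in operational storage.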

Within the purview of data analytics there is also data warehousing – data storage that is specifically maintained for analytics. More on this in future posts.

Data Cleaning & Preliminary Analysis

While the data storage process brings order and structure to the data ingested from multiple sources, that data is not always ready for analytics. Data, especially textual data, can often have issues such as spelling mistakes, special characters, and stray whitespace, which can undermine the results of analytics. Data ingestion can also introduce errors related to date formats, rounding of numerical values, truncation of text strings, and so on. All of these need to be handled either while the data is being ingested into storage, or right after it has been ingested. At this stage, it is also important to run some preliminary analysis on the data. This helps us understand how the data is distributed (particularly when the end goal involves statistical analysis), how much of the data is redundant, missing, or simply irrelevant, and whether more cleaning is required.
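The kind of cleaning and preliminary checks described above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the list of date formats, the characters that are kept, and the field names are assumptions for the example, and a real project would tailor them to its own sources.

```python
import re
from datetime import datetime

# Hypothetical date formats that different sources might send.
# Note the assumption that slash-separated dates are day-first.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def clean_text(value):
    """Drop special characters (keeping letters, digits, spaces, & and -),
    then collapse runs of whitespace and trim the ends."""
    value = re.sub(r"[^\w\s&-]", "", value)
    return re.sub(r"\s+", " ", value).strip()


def parse_date(value):
    """Try each known format in turn; return None if none of them match."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date()
        except ValueError:
            continue
    return None


def missing_share(rows, field):
    """Fraction of rows where `field` is absent, None, or an empty string."""
    if not rows:
        return 0.0
    missing = sum(1 for row in rows if row.get(field) in (None, ""))
    return missing / len(rows)


rows = [
    {"customer": "  Acme   Pty.  Ltd!! ", "joined": "03/02/2021"},
    {"customer": "Globex", "joined": ""},
]
print(clean_text(rows[0]["customer"]))
print(parse_date(rows[0]["joined"]))
print(missing_share(rows, "joined"))
```

Returning `None` for an unparseable date, rather than guessing, keeps bad values visible so that the preliminary analysis can count them.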

It is quite common for these first three sub-processes – data acquisition & source integration, data storage & warehousing, and data cleaning & preliminary analysis – to be implemented as one unified process within the analytics workflow.

The following three – feature engineering, statistical analysis & machine learning, and data visualisation & reporting – constitute the “analysis” portion of a data analytics workflow.

Feature Engineering

In many cases, data that has been cleaned is good enough for statistical analysis, reporting, and visualisation; however, it is not always optimal. Businesses might want to derive more information from the cleaned data, so that it becomes easier to filter reports or explain trends and historical events. Basically, existing data can be manipulated to create new “features” and “attributes” that have more value than the cleaned data from which they were created. In fact, data analytics and machine learning systems rely heavily on feature engineering processes. Efficient feature engineering can greatly enrich the quality of your business’ reports and forecasts.
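As a small illustration, the sketch below derives a few new features from a cleaned order record. The field names (`order_date`, `quantity`, `unit_price`) and the threshold for a “large” order are hypothetical; which features are worth creating depends entirely on the questions the business wants answered.

```python
from datetime import date


def add_features(order):
    """Return a copy of a cleaned order record with derived features added."""
    order_date = order["order_date"]
    total = order["quantity"] * order["unit_price"]
    return {
        **order,
        "order_total": total,
        "order_month": order_date.strftime("%Y-%m"),  # handy for filtering reports
        "is_weekend": order_date.weekday() >= 5,  # Monday is 0, so 5 and 6 are Sat/Sun
        "size_band": "large" if total >= 1000 else "small",  # hypothetical threshold
    }


order = {"order_date": date(2023, 7, 15), "quantity": 4, "unit_price": 300.0}
enriched = add_features(order)
print(enriched)
```

None of the new fields adds information that was not already in the record, but each makes a report easier to slice or a trend easier to explain.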

Statistical Analysis & Machine Learning

Analytical systems will frequently employ statistical methods for analysing data and identifying features that are relevant to the business questions that need answering. These methods also help determine which features in the data have strong predictive power and should be used in machine learning algorithms. A good portion of data analytics is oriented towards forecasting how business processes will perform and what pre-emptive steps are required to ensure business continuity. For this reason, data analytics teams often have at least one data scientist and one machine learning engineer who can formulate business hypotheses and test them using statistical methods.
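One simple way to get a first impression of which features relate to an outcome is to rank them by their correlation with it. The sketch below computes the Pearson correlation coefficient by hand; the feature names and figures are made up for illustration. Keep in mind that correlation only captures linear association, so it is a screening step under that assumption, not a measure of predictive power in itself.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        # The coefficient is undefined for a constant column;
        # returning 0.0 is a convention chosen for this sketch.
        return 0.0
    return cov / sqrt(var_x * var_y)


def rank_features(features, target):
    """Sort feature names by the absolute strength of their correlation with target."""
    scores = {name: pearson(values, target) for name, values in features.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


# Made-up figures, purely for illustration.
features = {
    "ad_spend": [1, 2, 3, 4, 5],
    "store_id": [3, 3, 3, 3, 3],
}
sales = [2, 4, 6, 8, 10]
for name, score in rank_features(features, sales):
    print(name, round(score, 3))
```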

Data Visualisation & Reporting

The results of analysis (variances, correlations, scores, etc.) can be quite difficult to read. Business stakeholders with the power to make decisions do not always have the technical knowledge required to make sense of statistical analysis. To accommodate such an audience, the results need to be presented as reports (written and visual) that drive the message home. An analytics workflow will include at least one dashboard or report, depending on the business requirement. The development of dashboards and reporting solutions can be quite cumbersome, and is affected by the underlying data storage (warehouse architecture, data models, etc.), so great care must be taken to ensure that your storage solution is not overcomplicated.

Data Archiving

A much overlooked process within data analytics projects, data archiving (not to be confused with data backup) can quicken the response times of your analytics solutions. By archiving data that is no longer in frequent use and is considered “offline”, we take it out of operational use, leaving behind aggregations and snapshots that do not need to be re-processed along with newer operational data. Reduced processing and archived storage often translate to reduced operational expense. Decisions about data archiving need to be made early in the development of the analytics workflow, and must be covered alongside the storage solution discussed earlier.
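The idea of moving old records out of operational use while leaving an aggregate behind can be sketched as follows. The record fields (`date`, `amount`), the cutoff date, and the choice of monthly totals as the aggregate are assumptions for this example; a real archive would also move the archived rows to cheaper storage.

```python
from collections import defaultdict
from datetime import date


def archive_old_records(records, cutoff):
    """Split records at `cutoff` and summarise the archived ones by month.

    Returns (active, archived, monthly_totals). Records dated before the
    cutoff are archived; the rest remain in operational use.
    """
    active, archived = [], []
    for record in records:
        (archived if record["date"] < cutoff else active).append(record)

    monthly_totals = defaultdict(float)
    for record in archived:
        monthly_totals[record["date"].strftime("%Y-%m")] += record["amount"]
    return active, archived, dict(monthly_totals)


records = [
    {"date": date(2020, 1, 5), "amount": 10.0},
    {"date": date(2020, 1, 20), "amount": 5.0},
    {"date": date(2024, 3, 1), "amount": 7.0},
]
active, archived, summary = archive_old_records(records, cutoff=date(2023, 1, 1))
print(len(active), len(archived), summary)
```

Reports that only need historical totals can read the small summary instead of re-processing every archived row.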

Not so simple

As mentioned earlier, there is the ever-lingering temptation to regard data analytics as a simple end-to-end pipeline. It seldom works so simply. Data analytics workflows, depending on the business requirement, can be quite complex. There are processes and considerations we have not covered in the preceding sections of this post, but the ones mentioned are the typical building blocks of an analytics workflow. When developing an analytics solution, teams also have to consider aspects such as data security, and the data compliance standards of the local governments where servers are hosted.

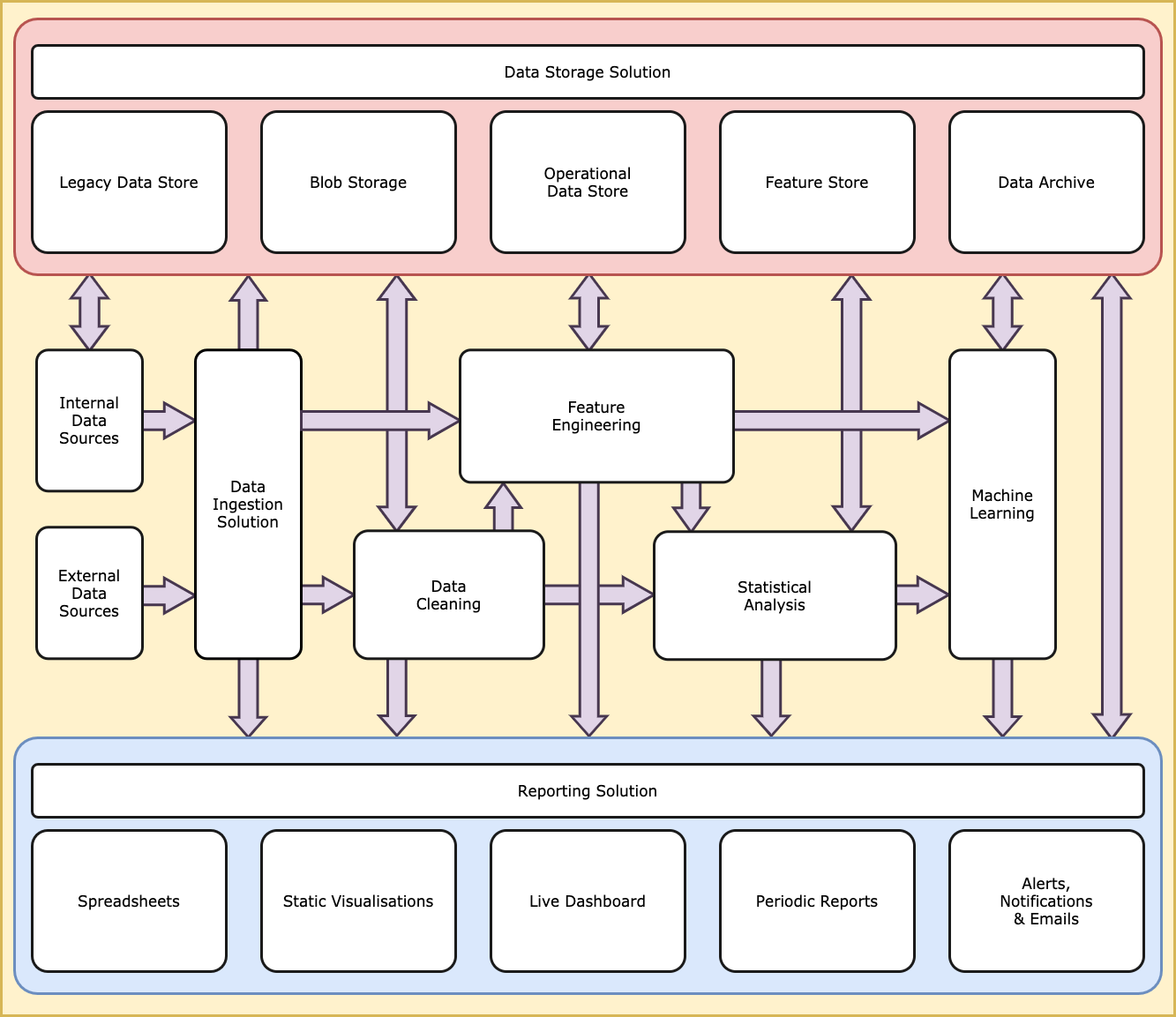

Keeping all of this in mind, here is how I’d visualise a basic analytics workflow.

Don’t let that image frighten you. It’s only meant to give you an idea of how data flows in a data analytics system. An efficient analytics workflow automates data movement from one sub-process to another and minimises human intervention, allowing analysts and business stakeholders to focus their attention on high-priority issues. It also helps individuals and teams make better decisions by shifting their focus from scouring crude data to analysing reports produced by the system.

Get in touch

At Exposé we pride ourselves on developing bespoke data analytics solutions that cater specifically to your business’ requirements. Whether you are looking to integrate reporting tools, automate your data workflows, or modernise your existing tech infrastructure by moving to the cloud, we can provide the service you require. Get in touch with us for a confidential initial discussion.