Author: Etienne Oosthuysen

Search for info on Azure Databricks and you’ll likely hear it described along the lines of “a managed Apache Spark platform that brings together data science, data engineering, and data analysis on the Azure platform”. The finer nuances and, importantly, information about the business benefits of this platform can be trickier to come by. This is where our ‘cheat sheet’ comes in. This is the first of a series designed to assist you in deciphering this potentially complicated platform. Feel free to also read the second article in the series, on distilling information from data, once you have finished this one.

What is it?

Databricks is a managed platform in Azure for running Apache Spark. Apache Spark, for those wondering, is a distributed, general-purpose, cluster-computing framework. It provides in-memory data processing capabilities and development APIs that allow data workers to execute streaming, machine learning or SQL workloads—tasks requiring fast, iterative access to datasets.

There are three common data worker personas: the Data Scientist, the Data Engineer, and the Data Analyst. Through Databricks, they’re able to collaborate on big data projects and acquire, engineer and analyse data, wherever it exists, in parallel. The bigger picture is that they are therefore all able to contribute to a final solution which is then brought to production.

Databricks is not a single technology but rather a platform that can, thanks to all its moving parts, personas, languages, etc., appear quite daunting. With the aim of simplifying things, our cheat sheet starts with a high-level snapshot of the workloads performed on Databricks by our Data Scientist, Data Engineer and Data Analyst personas.

We’ll then look at some real business benefits and why we think businesses should be paying attention. Lastly, we’ll delve into two related workloads:

- Data transformation, and

- Queries for visual analysis.

Our subsequent cheat sheets will start to unpick the remaining workloads.

The image below shows a high-level snapshot of the workloads performed by our three data worker personas. The workloads in the coloured sections form (to varying degrees) the basis for the contents of our cheat sheet.

- Data engineering forms, in our opinion, the largest of the cohort of workloads:

  - Data acquisition – i.e. how data is acquired for transformation, data analysis and data science using Databricks. This may fall outside Databricks itself, because data can be used wherever it exists (for example Azure Blob or Azure Data Lake stores, Amazon S3, etc.) and may already be hosted in those stores as a result of some preceding ETL process. Databricks can of course also acquire data itself.

  - Data transformation – discussed later in this article, focussing on the ETL processes within Databricks (ETL within).

- Data analysis takes on two flavours:

  - Queries – these could overlap heavily with the world of the Data Scientist, especially if the languages used are Python or R and if the intent is machine learning and predictive analytics. But Data Analysts could, of course, also perform queries for ‘on the fly’ data analysis.

  - Queries for visual analysis – queries are also performed to ready data for visual analysis. This is discussed later in this article; however, it must be noted that the lines between this kind of query and the data transformation performed by the Data Engineer can become very blurred. This blurring itself illustrates the collaborative and parallel way of working that Databricks allows.

- Data science covers machine learning and its associated algorithms, with predictive and explanatory analytics as the end goal. Here too, queries are performed, and the lines are similarly blurred with the queries performed by the Data Analyst and the Data Engineer.

- Underpinning all of this are the workloads involved in moving the solutions to production states.

These workloads are logical groupings only, aimed at clearing what could otherwise be muddy waters to the untrained eye. Queries may, for example, be performed, then used for transformations, data science and visual analysis.

So, without any further ado, let’s look at why businesses should be watching Databricks very closely!

Why Databricks? – Beyond the guff, business benefits and importantly, why businesses should care

If you search on Google for ‘Apache Spark’ you’ll find loads of buzzwords – “open-source”, “distributed”, “big data”, etc. On first glance, this can look like marketing babble and appear completely removed from a business’s actual data challenges. So let’s dispense with the buzzwords and focus on the business challenges.

Note also: although Apache Spark (and therefore Databricks too) is positioned in the big data camp, its application is not limited to big data workloads. So, if some of the challenges we list below apply to your data landscape (big data or not), read on.

Time to market

Challenge – data warehouses take too long to deliver business benefit.

Benefit – Databricks is naturally geared towards agility via its ability to serve parallel collaboration, which, in turn, leads to improved responsiveness to change. This means that the time it takes to deliver data workloads is reduced.

Parallel collaboration rather than seriality

Challenge – participants in the data solutions processes are too dependent on each other to complete their tasks before they can participate. These challenges are a result of serial workloads

Benefit – parallel collaboration delivers maximum agility. It means that the three main data personas, i.e. the Engineer, the Scientist and the Analyst can collaborate on delivering the data elements that will form part of a final data deliverable in parallel. As the Engineer acquires the data, the Analyst, the Scientist and indeed the Engineer start contributing to the logic that transforms and manipulates the data all in parallel. This, in turn, contributes to a reduction in time for solutions to get to market

Responsiveness and nimbleness

Challenge – companies change, requirements change, and business may not know exactly what they want or need from data that is stored in a variety of formats in different locations

Benefit – companies frequently generate thousands of data files, hosted in diverse formats including CSV, JSON, and XML from which analysts need to extract insights

The classic approach to querying this data is to load it into a central data warehouse. But this involves the design and development of databases and ETL. This works well but requires a great deal of upfront effort, and the data warehouse can only host data that fits the designed schema. This is costly, time-consuming and difficult to change.

With the data warehouse approach, insights can only be extracted after the data is transformed upon load.

Databricks presents a different approach: data is transformed, and insights extracted, upon query from vast amounts of data stored cheaply in its native format (such as XML, JSON, CSV, Parquet, and even relational database and live transactional data) in Blob Stores. With Databricks, data is read directly from the raw files and, using SQL queries, cleansed, joined and aggregated – hence the term transform upon query.

Transforming the data each time a query runs means this approach is much more geared towards quick turnaround and is more responsive to change. BUT, it requires superior performance.

Performance

Challenge – workloads (such as queries) serving analytics and data science are run often and transform the data each time they run (transform upon query). Logic dictates that this will not perform as well as transforming the data once upon load and materialising the result for reuse.

Benefit – Databricks provides a performant environment that handles the transform upon query paradigm. This is done by utilising a variety of mechanisms, such as:

- Databricks includes a Spark engine that is faster and performs better through various optimisations at the I/O layer and processing layer:

- For example, Spark clusters are configured to support many concurrent queries and can be scaled to handle increased demand.

- It includes high-speed connectors to Azure storage (i.e. Azure Blob and Azure Data Lake stores)

- It uses the latest generation of Azure hardware (Dv3 VMs), with NVMe SSDs capable of even faster I/O performance.

A managed big data (or in our opinion, all data) platform

Challenge – The data landscape is becoming increasingly complex and fragmented and costly to maintain.

Benefit – “Databricks is a managed platform (in Azure) for running Apache Spark – that means that you neither have to learn complex cluster management concepts nor perform tedious maintenance tasks to take advantage of Spark. Databricks also provides a host of features to help its users to be more productive with Spark. It’s a point and click platform for those that prefer a user interface, such as data scientists or data analysts.” – https://docs.databricks.com/_static/notebooks/gentle-introduction-to-apache-spark.html

Not just Azure Blob Storage – access data where it lives

Challenge – Data is not necessarily stored in Azure Blobs

Benefit – Databricks connections are not limited to Azure Blob or Azure Data Lake stores. Databricks can also connect to Amazon S3, to Azure Event Hubs and, via JDBC (Java Database Connectivity), to data stores such as PostgreSQL, Hive, MySQL and Azure SQL Database. So, you can immediately start to benefit from the cost, flexibility and performance benefits offered by Databricks for your existing data.
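As a rough illustration of the JDBC route, the sketch below assembles the options that Spark's generic JDBC reader expects for a PostgreSQL source. The function names, host, database, table and credentials are hypothetical placeholders of our own, and `spark` is assumed to be the session that a Databricks notebook provides.

```python
def postgres_jdbc_options(host, port, database, table, user, password):
    """Build the option map for Spark's generic JDBC data source."""
    return {
        "url": f"jdbc:postgresql://{host}:{port}/{database}",
        "dbtable": table,
        "user": user,
        "password": password,
        "driver": "org.postgresql.Driver",
    }


def read_jdbc_table(spark, options):
    """Return a DataFrame backed by the remote table described in `options`."""
    return spark.read.format("jdbc").options(**options).load()
```

In practice the password would come from a secret store rather than being typed into a notebook cell.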

Cost of the cluster

Challenge – Big data solutions tend to cost a lot of money

Benefit – The Databricks File System (DBFS) is a layer over your data (where it lives) that allows you to mount the data, making it available to other users in your workspace and persisting the data after a cluster is shut down. Data is not synced, but mounted, which means you do not pay twice for storage.

When a Databricks cluster is shut down (which is also done automatically at an interval you specify when not in use), it stops costing you money, so you only pay for what you use

Furthermore, Azure Databricks leverages the economies of scale provided by Azure. Analysis workloads (interactive workloads for analysing data collaboratively with notebooks) on a Premium F4 instance (4 virtual CPUs and 8 GB RAM) running 24 x 7 will, for example, only cost you $380 per month. And Data Engineering workloads (automated workloads for running fast and robust jobs via API or UI) for the same tier will, for example, only cost you $307 per month.

*Note that the pricing above is in AUD and is an estimate only as per the Azure Pricing Calculator.
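The figures above are for a cluster that runs around the clock. As a rough sketch of what paying only for what you use could mean, the snippet below derives an implied hourly rate from those figures and applies it to a hypothetical working-hours pattern. The 730 hours per month and the pattern of 8 hours on 22 days are our own assumptions, and real pricing may not scale perfectly linearly.

```python
HOURS_PER_MONTH = 730  # assumption: average hours in a month behind a 24 x 7 estimate


def implied_hourly_rate(monthly_cost_24x7):
    """Hourly rate implied by a month of continuous running."""
    return monthly_cost_24x7 / HOURS_PER_MONTH


def estimated_monthly_cost(monthly_cost_24x7, hours_used):
    """Scale the implied hourly rate to the hours the cluster is actually up."""
    return implied_hourly_rate(monthly_cost_24x7) * hours_used


# Hypothetical usage pattern: 8 hours a day on 22 working days.
business_hours = 8 * 22
analysis_cost = estimated_monthly_cost(380, business_hours)
engineering_cost = estimated_monthly_cost(307, business_hours)
print(f"Analysis: ~${analysis_cost:.2f}, engineering: ~${engineering_cost:.2f} per month")
```

Under these assumptions the two workloads come to roughly $92 and $74 a month respectively, again in AUD and as an estimate only.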

Australian region

Challenge – some big data solutions, such as the first generation of Azure Data Lake, are not available in the Australian region as at the date of first publication of this article.

Benefit – Databricks can be provisioned in the following Australian regions:

- Australia Central

- Australia Central 2

- Australia East

- Australia Southeast

Like everything, there are some downsides/realities to consider

SQL, R, Python, Scala – can be daunting

SQL has become the “lingua franca” for most Data Engineers and Data Analysts, whereas the same applies to R and Python for Data Scientists. These personas collaborate on Databricks using notebooks as interfaces to the data, which allows them to create runnable code, visualisations and narrative.

Suddenly these personas gain visibility over code written by other personas in the same notebook. Because a notebook can mix several languages, this can seem quite daunting to anyone who has not previously used some of them, especially as the languages used in Databricks (R, Python, Scala and SQL) each have their peculiarities.

Obviously this is only an issue if you are unfamiliar with such an environment. For those with good coverage of SQL, R, Python and Scala, it is a benefit: they can easily work with multiple languages in the same Databricks notebook, i.e. each persona can use their preferred language irrespective of the choices of others. All that needs to be done is to start the cell with the appropriate magic command, such as %python, %r, %sql, etc.

From another viewpoint however, this diversity of languages can be a strength for the right business environment: the workflow naturally dissipates technical debt and encourages capability sharing.

Learning curve

There will often be a requirement for personas to become more familiar with a broader set of languages and the notebook environment, so that following what is happening across the whole notebook becomes easier. This will make for easier collaboration and is in line with a move from pure serial to more parallel workloads.

Case study – Data Transformation and Visual Analysis

The use case described in this section is used as a vehicle for a more technical deep dive into the workloads shown in the coloured sections of the Databricks Workflow image above (i.e. Data Transformations and ETL within Databricks, and Queries for visual analysis).

Our use case – IoT and wearable devices, such as Apple Watches, are currently under a substantial spotlight as there is a lot of interest in what can be gleaned from the data they produce. In our use case, Apple Watch data is brought into Azure, from where the datasets are mounted to Databricks; ETL processes then transform and load the data, and finally queries are performed.

An Apple Watch is used to generate data we will use in this user story. An app on the watch integrates with Azure and streams some data into Azure Blob Storage (this app and stream are not within the scope of this article as Data Acquisition will be discussed in a subsequent article).

The data manifests itself as CSV files in Azure Blob store > Container:

Data Engineering > Data Transformation > ETL within

This section assumes that data is already available in an appropriate store for mounting (in this case Azure Blob store). We notionally call the next steps “ETL within Databricks” as they represent a logical ETL that will extract and validate the data, apply a schema, then load the data ready for use (for example, for analytical querying). ETL within Databricks should not be confused with ETL to get data into Azure in the first place (which will be discussed in a subsequent article).

ETL within Databricks is conceptually the same as the ETL concepts we know from conventional BI workloads, in that you first extract the data, then transform it, and then load it, but it is done in a much nimbler fashion and it adheres to the notion of the transformation of data upon query, rather than upon load.

The common steps associated with our two workloads, i.e. ETL within and queries to ready the data for visual analysis are shown visually in the image below:

Remember that Spark is the engine used by Databricks, and SQL/Scala/Python/R/Java use that engine to perform the various workload tasks.

In the sections below, we will first mount our Apple Watch data (this is the extract step). We will then transform the data and load it into a table using SQL (the amber route shown above), or alternatively create a data frame and load it as a Parquet file (the green route above). Later we will deal with analysis of the loaded data, readying it for uses such as visual analysis. For now let’s focus on the ETL.

The queries shown in each step below are examples of what could be done and should give the reader a starting point from where to build more complicated ETL within Databricks and subsequent queries. Databricks is a massively flexible platform, so the sample queries may be made much more complex or approached in an entirely different way.

Extract

In the first step we mount the data held in our Azure Blob store to the Databricks File System (DBFS). This represents the “Mounted Stores in DBFS” step in the image above (we are not focussing on the JDBC step in this use case).

We first generated a SAS URL for the Azure Blob store to use as a variable, then used it in the query.

Mounting means creating a pointer to the store, which means that the data never actually syncs. The mount point is simply a path representing where the Blob Storage container or a folder inside the container is mounted in DBFS.

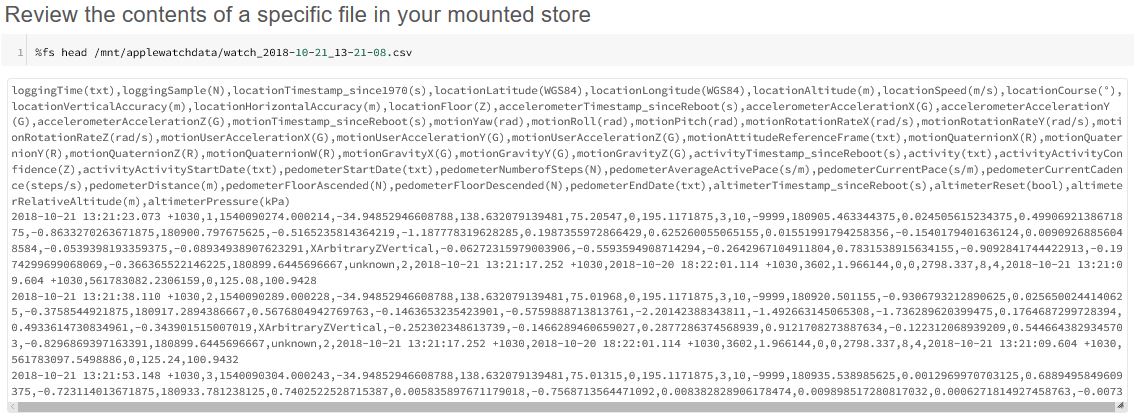
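For readers who prefer text they can copy, a mount along these lines can be sketched in Python as follows. This is a minimal sketch rather than our exact notebook cell: the function name, storage account, container and mount point are hypothetical, `dbutils` is the utility object a Databricks notebook provides, and we assume the SAS token (the query-string portion of the SAS URL) is what gets passed to the mount.

```python
def mount_blob_container(dbutils, account, container, sas_token, mount_point):
    """Mount an Azure Blob Storage container into DBFS using a SAS token."""
    host = f"{container}@{account}.blob.core.windows.net"
    dbutils.fs.mount(
        source=f"wasbs://{host}",
        mount_point=mount_point,
        # The SAS token is supplied as a configuration entry scoped to this container.
        extra_configs={
            f"fs.azure.sas.{container}.{account}.blob.core.windows.net": sas_token
        },
    )
    return mount_point
```

In a notebook this might be called as `mount_blob_container(dbutils, "mystorageaccount", "applewatch", sas_token, "/mnt/applewatch")`, where every argument is a placeholder for your own values.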

Optional – We may quickly validate the mount by running the following query to see the contents of the mount point.

Optional – Lastly, we validate the data by looking at the content of any one of the files within our mount point.
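Both optional checks can be sketched with the file system utilities a Databricks notebook exposes. The helper names and paths below are hypothetical, and `dbutils` is assumed to be the object the notebook provides.

```python
def list_mount(dbutils, mount_point):
    """Return (name, size) pairs for the files found under the mount point."""
    return [(f.name, f.size) for f in dbutils.fs.ls(mount_point)]


def preview_file(dbutils, path, max_bytes=500):
    """Return the first bytes of a file as text, enough to eyeball header and rows."""
    return dbutils.fs.head(path, max_bytes)
```

For example, `list_mount(dbutils, "/mnt/applewatch")` would list the CSV files, and `preview_file` on any one of them shows whether the header row and delimiters look as expected.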

Transform

As per the Transform steps, there are two options: a SQL path (shown in Amber) and a Scala/ Python/ R/ Java path (shown in Green). The reader can jump to the Scala/ Python/ R/ Java path if wanting to bypass the SQL sections, which to many may seem a bit familiar.

Transform and Load using SQL (Option A)

We use SQL to create a table in DBFS which will “host the data” via metadata, and infer the schema from the files in our Azure Blob store container. Note that the schema can be explicit rather than inferred. In our use case all our files have the same structure and the schema can therefore be inferred. In cases where structures differ, standardisation queries will need to precede this step.
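A statement of this kind can be sketched as below, wrapped in Python so that it can be issued from any notebook cell. The table name and path are hypothetical, `spark` is assumed to be the notebook's session, and the same SQL could equally be typed straight into a SQL cell.

```python
def create_csv_table_sql(table_name, path):
    """Build a CREATE TABLE statement over CSV files, with an inferred schema."""
    return (
        f"CREATE TABLE IF NOT EXISTS {table_name} "
        "USING csv "
        f"OPTIONS (path '{path}', header 'true', inferSchema 'true')"
    )


def create_csv_table(spark, table_name, path):
    """Register the table; the CSV files stay where they are in the mount."""
    spark.sql(create_csv_table_sql(table_name, path))
```

To make the schema explicit instead, the column definitions would be listed after the table name and the `inferSchema` option dropped.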

It is worth noting that in Databricks a table is a collection of structured data. Tables in Databricks are equivalent to Data Frames in Apache Spark.

Optional – We can now perform all manner of familiar SQL queries. It is also worth noting that data can be visualised on the fly using the options in the bottom left corner. In the first example, we review the data we have just loaded; in the second, we do a simple record count.
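The two review queries might look something like the sketch below. The helper names and the `record_count` alias are our own, the table name is whatever was used when the table was created, and `spark` is assumed to be the notebook's session.

```python
def preview_query(table_name, limit=10):
    """Build a query that returns the first few rows of a table."""
    return f"SELECT * FROM {table_name} LIMIT {int(limit)}"


def count_query(table_name):
    """Build a query that counts the records in a table."""
    return f"SELECT COUNT(*) AS record_count FROM {table_name}"


def run_review_queries(spark, table_name):
    """Run both queries and return (sample rows, record count)."""
    sample = spark.sql(preview_query(table_name)).collect()
    record_count = spark.sql(count_query(table_name)).collect()[0]["record_count"]
    return sample, record_count
```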

Transform and Load using Scala (Option B)

Tables are familiar to any conventional database operator. Let’s now extend this concept to include Data Frames. A Data Frame is essentially the core Transformation layer in this alternative ETL path – it is a dataset organised into named columns. It is conceptually equivalent to a table in a relational database but with richer optimisations under the hood. Data Frame code follows a “spark.read.option” pattern.

In the next query, we read the data from the mount and infer the headers (we know that all our files have the same format, so no preceding column standardisation is required). We select only the columns of value to us and, as a subsequent step, rename them, because Parquet does not allow column names that contain restricted characters such as “(” and “,”.
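Although this option is headed Scala, the same read, select and rename steps can be sketched in Python, which is what we do here. The function names, path and column names are hypothetical, `spark` is assumed to be the notebook's session, and the set of restricted characters is the one Spark has traditionally reported when writing Parquet.

```python
import re

# Characters Spark has traditionally rejected in Parquet column names.
_RESTRICTED = re.compile(r"[ ,;{}()\n\t=]+")


def clean_column_name(name):
    """Replace each run of restricted characters with a single underscore."""
    return _RESTRICTED.sub("_", name).strip("_")


def read_watch_csv(spark, path, wanted_columns):
    """Read CSV files with a header row, keep selected columns and rename them."""
    df = (
        spark.read
        .option("header", "true")       # first line of each file holds the column names
        .option("inferSchema", "true")  # let Spark work out the column types
        .csv(path)
    )
    selected = df.select(*wanted_columns)
    return selected.toDF(*[clean_column_name(c) for c in wanted_columns])
```

With this helper a column called `Steps (count)` would become `Steps_count`, which Parquet accepts.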

We lastly load the data into a Parquet file in DBFS. Whilst blob stores like AWS S3 and Azure Blob are the data storage options of choice for Databricks, Parquet is the storage format of choice. Parquet files use a highly efficient, column-oriented format that shows massive performance increases over other options such as CSV. For example, Parquet compresses data repeated in a given column and preserves the schema it was written with.
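The load step itself is short. A minimal sketch, assuming a DataFrame whose column names are already free of restricted characters and a hypothetical target path:

```python
def write_parquet(df, path, mode="overwrite"):
    """Write a DataFrame to `path` as Parquet, replacing earlier output by default."""
    df.write.mode(mode).parquet(path)
    return path
```

We default to overwrite here so that the step can be rerun safely; `append` would be the choice if new files arrive incrementally.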

Queries for Visual Analysis

Once we have Extracted, Transformed and Loaded the data we can now perform any manner of query-based analysis. We can for example query the Parquet file directly, or we can create a table from the Parquet file and then query that, or we can bake the final query into the Table create.

Let’s first query the Parquet directly:
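Two ways of doing this are sketched below: reading the file into a DataFrame, or pointing a SQL query straight at the path. The helper names and the path are hypothetical, and `spark` is assumed to be the notebook's session.

```python
def read_parquet(spark, path):
    """Load the Parquet output as a DataFrame; the schema comes from the file itself."""
    return spark.read.parquet(path)


def parquet_sql(path, limit=10):
    """Build a SQL query that reads a Parquet path directly, without a table."""
    return f"SELECT * FROM parquet.`{path}` LIMIT {int(limit)}"
```

The second form could be passed to `spark.sql(...)` or typed into a SQL cell.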

Now let’s create a table from its Metadata which can then be used by BI tools such as Power BI.
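A table over the Parquet file can be registered with a statement like the one sketched here. The table name and path are hypothetical, and `spark` is assumed to be the notebook's session.

```python
def create_parquet_table_sql(table_name, path):
    """Build a CREATE TABLE statement that points at existing Parquet data."""
    return (
        f"CREATE TABLE IF NOT EXISTS {table_name} "
        "USING parquet "
        f"OPTIONS (path '{path}')"
    )


def create_parquet_table(spark, table_name, path):
    """Register the table so that BI tools can find it by name."""
    spark.sql(create_parquet_table_sql(table_name, path))
```

No schema needs to be declared because Parquet carries its own.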

In the final query, we query the table and prepare the data for visual analysis in something like Power BI. We select the maximum number of steps taken by our Apple Watch wearer per day (we only loaded two days’ worth of data).
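An aggregation of this shape might be sketched as follows. The table and column names (`Start`, `Steps_count`) are hypothetical, and we assume the start column is a timestamp, or a string in a standard date format, so that `to_date` can reduce it to a day.

```python
def max_steps_per_day_sql(table_name, start_col="Start", steps_col="Steps_count"):
    """Build a query returning the highest step reading for each day."""
    return (
        f"SELECT to_date({start_col}) AS activity_date, "
        f"MAX({steps_col}) AS max_steps "
        f"FROM {table_name} "
        f"GROUP BY to_date({start_col}) "
        "ORDER BY activity_date"
    )
```

The result has one row per day, which is a convenient shape for a bar or line chart in a BI tool.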

In subsequent articles, we will introduce many of the other workloads associated with Databricks, building on the concepts we used in this article.

Author: Etienne Oosthuysen; Contributor: Rajesh Kotian