Author: Etienne Oosthuysen

The Microsoft technology stack, specifically Azure, has over the past few years provided many best-of-breed solutions to common data problems and has tackled the data analytics domain with a different approach from its competitors (AWS and GCP). Recent changes have seen Microsoft consolidate and tightly couple some of the key data resources, which makes for an even more compelling story.

This is something that Exposé have been evangelising in the market for quite some time now. Even though we had access to various product roadmaps, and were therefore fortunate enough to architect solutions for our customers with a future state in mind, it was great to see some of these roadmap areas reaffirmed and in action at Ignite 2021.

So, looking through the lens of data analytics professionals, here are our six most important takeaways from Ignite 2021. In each section we state why data-driven organisations should be interested.

- Reinventing Data Governance – Azure Purview.

- Ensuring performance of your new modern data warehouse – Power BI and Azure Synapse Analytics.

- Bringing BING to data analytics – Azure Cognitive Search (Semantic Search).

- Bringing premium capabilities to users – Power BI Premium Gen2.

- Reining in the uncontrolled release of Excel through Power BI Connectivity – Power BI.

- Add cores to boost your BI performance – Power BI Premium Gen2.

Exposé will also delve into some of these areas in more detail in subsequent articles.

Contributors: Andrew Exley and Etienne Oosthuysen

Data governance across your digital landscape

Technology – Azure Purview

Reinventing Data Governance.

By Etienne

Why – As we acquire and store increasing volumes of data, greater numbers of data workers will use more data to answer increasingly varied business questions at scale and with a quick turnaround. Each data activity has the potential to contribute to the collective knowledge across the business. So, with the explosion of raw and enhanced data, governing data is more important than ever. But it must be done in a pragmatic way, as we no longer have the luxury of old-school data workloads such as conventional data warehousing, which ensured data was as perfect as it could be and that every single transformation was designed, understood, and documented. Those old-school workloads simply cannot deal with modern data realities and the speed with which data solutions must be stood up. This means that the governance of data has also had to be reinvented to keep up.

Summary – Azure Purview allows for management of all the data in your data estate (on-premises, Azure Data Lake Gen 2, Azure SQL Database, Cosmos DB, and even AWS and GCP) through a single pane of glass, where all the infrastructure and services required are provisioned as a single PaaS platform and user experience.

Core to Purview is an active (metadata) Data Map, which automatically records where all the data assets are via built-in scans and classification.

Take away – for on-premises data, Purview allows for the hosting of scanning and classification services on-premises so that it all happens behind your own firewall.

Data Map then drives Data Catalog, which supports self-service data discovery, and Data Insights, which provides a bird’s eye view of where data assets are and what kind of data assets are present.

Take away – it is not only stored data that can be included in the Data Map, but also other workloads such as Azure Data Factory, which means data lineage is automatically populated from origination through to consumption (e.g., Power BI), and Power BI reports and datasets, which means consumption objects themselves can form part of the discoverable metadata, cataloguing and insights.
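To make the idea of scan-time classification more concrete, here is a minimal Python sketch. It is not Purview's API or its classification engine: the rule names, the regular expressions, the 80% threshold, and the `classify_column` and `build_data_map` helpers are all hypothetical, chosen only to illustrate how sampled values might be matched against classification rules and recorded in a metadata map.

```python
import re

# Hypothetical classification rules (label -> pattern); not Purview's built-in rules.
RULES = {
    "Email Address": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "ISO Date": re.compile(r"^\d{4}-\d{2}-\d{2}$"),
}


def classify_column(samples, threshold=0.8):
    """Return labels whose pattern matches at least `threshold` of the non-empty samples."""
    values = [s for s in samples if s]
    if not values:
        return []
    labels = []
    for label, pattern in RULES.items():
        hits = sum(1 for v in values if pattern.match(v))
        if hits / len(values) >= threshold:
            labels.append(label)
    return labels


def build_data_map(assets):
    """Map {asset: {column: [samples]}} to {asset: {column: [labels]}}."""
    return {
        asset: {col: classify_column(samples) for col, samples in cols.items()}
        for asset, cols in assets.items()
    }


# Made-up sample data standing in for the result of a scan.
scanned = {
    "crm.customers": {
        "email": ["a@example.com", "b@example.org"],
        "signup_date": ["2021-03-02", "2021-03-04"],
        "notes": ["hello", ""],
    }
}
data_map = build_data_map(scanned)
print(data_map)
```

The point of the sketch is the shape of the output: a map from asset to column to classification labels, which is the kind of metadata a catalogue or insights view can then be built on.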

Even deeper integration between Azure Synapse Analytics and Power BI for superior performance

Technology – Azure Synapse Analytics and Power BI

Ensuring performance of your new modern data warehouse.

By Etienne

Why – Reporting over big data exposed through a modern data warehouse is no longer an obscure, unique requirement. We see it more and more with our customers, especially as data volumes explode and fast visualisations are needed over business logic already created in the modern data warehouse.

Summary – these requirements are what the modern data warehouse as a service (such as Synapse in Azure, Redshift in AWS, or other services such as Databricks or Snowflake) is meant to meet. But performance, even though already hugely improved, remained elusive in some cases when data ingestion, the data warehouse, and data visualisation are viewed as one comprehensive data pipeline. Now, the integration between Synapse and Power BI includes adaptive learning.

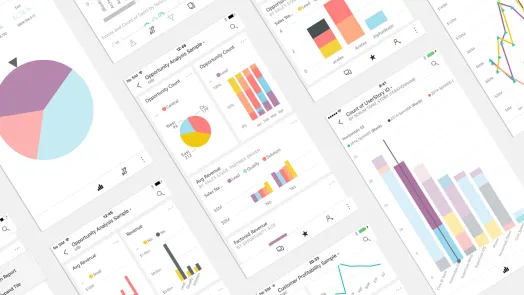

Take away – Power BI collects query patterns, determines which materialised views would give optimal performance, and shares them with Synapse. Synapse creates the materialised views and directs Power BI queries to run over them, which delivers significant performance advantages. See below how the query performance improved by almost 500% between the original query shown in red and the query after adaptive learning shown in blue.
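As a rough illustration of the idea of deriving materialised view candidates from query patterns, the following Python sketch counts how often the same table and grouping columns recur in a query log. This is not Microsoft's adaptive learning algorithm, which is not described in the announcement; the log format, the `suggest_view_candidates` helper, and the `min_count` threshold are assumptions made purely for illustration.

```python
from collections import Counter


def suggest_view_candidates(query_log, min_count=3):
    """Return (table, grouping columns) patterns seen at least `min_count` times.

    `query_log` is an iterable of (table, group_by_columns) pairs. Column order is
    ignored, so GROUP BY region, year and GROUP BY year, region count as one pattern.
    """
    counts = Counter((table, tuple(sorted(cols))) for table, cols in query_log)
    return [pattern for pattern, n in counts.most_common() if n >= min_count]


# Made-up query log: the same aggregation over `sales` recurs four times.
log = (
    [("sales", ["region", "year"])] * 3
    + [("sales", ["year", "region"])]
    + [("sales", ["product"])] * 2
)
print(suggest_view_candidates(log))
```

In this toy version, a pattern that recurs often enough becomes a candidate for pre-aggregation, which is the general reason a materialised view can answer repeated report queries faster than the base tables.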

In addition, Power BI, Synapse and Azure Log Analytics also now provide the ability to correlate queries so that performance bottlenecks can be identified.

Take away – It is now possible to monitor the integrated Power BI and Synapse ecosystem via Azure Log Analytics, both at the tenancy level and at the workspace level. Both Power BI and Synapse send all their performance telemetry to the Log Analytics workspace. In the past, these queries had to be analysed independently, but now the Power BI queries and the Synapse queries are correlated, so that performance analysis can be performed on the end-to-end pipeline (covering DAX, M query and SQL) and it becomes easy to identify bottlenecks.
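The value of correlated telemetry can be sketched in a few lines of Python. The example below is an assumption-laden illustration, not the Log Analytics schema: the `correlation_id` and `duration_ms` field names are hypothetical, and it assumes the Power BI duration includes the time spent waiting on Synapse.

```python
def correlate(powerbi_events, synapse_events):
    """Join Power BI and Synapse events on a shared id and name the slower layer."""
    synapse_by_id = {e["correlation_id"]: e for e in synapse_events}
    rows = []
    for pbi in powerbi_events:
        syn = synapse_by_id.get(pbi["correlation_id"])
        if syn is None:
            continue  # no matching Synapse query, so nothing to correlate
        sql_ms = syn["duration_ms"]
        # Assumption: the Power BI duration is end-to-end, including the SQL wait.
        powerbi_only_ms = pbi["duration_ms"] - sql_ms
        rows.append({
            "correlation_id": pbi["correlation_id"],
            "powerbi_only_ms": powerbi_only_ms,
            "sql_ms": sql_ms,
            "bottleneck": "synapse" if sql_ms > powerbi_only_ms else "powerbi",
        })
    return rows


# Made-up telemetry records.
powerbi = [
    {"correlation_id": "q1", "duration_ms": 1000},
    {"correlation_id": "q2", "duration_ms": 500},
]
synapse = [{"correlation_id": "q1", "duration_ms": 800}]
report = correlate(powerbi, synapse)
print(report)
```

Without a shared identifier, the two event streams would have to be matched by hand on timestamps; with one, the split between report-side and warehouse-side time falls out of a simple join.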

Discovery of data content improved with Semantic Search and its role within data analytics

Technology – Azure Cognitive Search (Semantic Search)

Bringing BING to data analytics.

By Etienne

Why – Azure Cognitive Services, already widely used, relied on key word search in its Cognitive Search service. This was not as accurate as the algorithms used by, for example, BING.

Semantic Search leverages the same capabilities that are used in BING to achieve the same search-engine kind of outcome, but over your own data. This is one of the most exciting innovations in Azure Cognitive Services in a while. Azure Semantic Search now better understands what users are looking for and achieves better search accuracy compared to the older key word search methods.

Take away – A data worker can quickly enable OCR, Text Analytics and Object Detection without the need for ML coding, including over data in your Azure Data Lake Gen 2.

Summary – During data ingestion, data can either be pulled into the search index or pushed into it using the push API. Content can include non-uniform data, information in files, and even images. Index-appropriate information is then extracted, and pre-built ML algorithms are invoked to understand latent structure in the data. Semantic relevance, captions, and answers are then added as part of Semantic Search.

With normal key word search, the ambiguity of the word “Capital” creates a clear issue when one looks at the search term: a result about capital gains tax in France, for example, has no relevance to the original search term.

Take away – Semantic Search includes learned ranking models which provide associations in a much more accurate way compared to key word search.

When Semantic Search is enabled, the learned ranking models provide much more accurate search results, as seen on the right below.

Take away – Captions are also shown (in bold), highlighting the context of our search, along with a suggested answer, similar to a conventional search engine.
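For readers who want to see what switching from key word search to Semantic Search might look like in a request, here is a hedged Python sketch that only builds the URL and JSON body and does not call the service. The parameter names (`queryType`, `queryLanguage`, `searchFields`, `answers`) and the API version reflect our understanding of the public preview REST API and should be checked against the current documentation; the service name, index name, field names, and query text are hypothetical.

```python
import json

# Assumed preview API version; verify against the current Azure documentation.
API_VERSION = "2020-06-30-Preview"


def build_semantic_query(service, index, text, fields):
    """Build (url, json_body) for a semantic query; nothing is sent over the network."""
    url = (
        f"https://{service}.search.windows.net/indexes/{index}"
        f"/docs/search?api-version={API_VERSION}"
    )
    body = {
        "search": text,
        "queryType": "semantic",          # instead of the default key word query
        "queryLanguage": "en-us",
        "searchFields": ",".join(fields),  # fields the ranker should consider
        "answers": "extractive|count-1",   # ask for one suggested answer
        "top": 5,
    }
    return url, json.dumps(body)


# Hypothetical service, index, fields, and query text.
url, payload = build_semantic_query(
    "my-service", "my-index", "capital of France", ["title", "content"]
)
print(url)
print(payload)
```

The request would also need an `api-key` header and an index that has Semantic Search enabled, both of which are outside the scope of this sketch.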

Take away – this seems far removed from data analytics, but it is in fact increasingly relevant. Baking this kind of intelligent search over your data into your broader data analytics ecosystem, alongside your reporting solutions, is important when one considers that data workers no longer consume data via reporting solutions only. Microsoft is already doing some of this via Power BI’s Q&A (natural language queries), but Semantic Search puts it on steroids.

Premium Per User License for Power BI

Technology – Power BI Premium

Bringing premium capabilities to users.

By Andrew

Why – Over the past few years Microsoft has been adding features to Power BI that enable data analysts to work with data in a way that was normally limited to complex systems managed by data engineers. These capabilities have allowed organisations to accelerate their time to value by simplifying these controls and pushing them further into the business. Unfortunately, a lot of these features had been out of reach of most organisations due to a cost barrier and, until now, there was no alternative method to enable these empowering features for other Power BI clients. From 2 April 2021, Microsoft will be launching the Premium Per User license at USD$20 per user per month, which will enable organisations to provide and pay for these features on a per-individual basis.

Summary – Microsoft makes features that are usually limited to high-cost analytics systems available to organisations at a price point that is based on a per-user model.

Take away – Utilising Premium capabilities opens further options for how an organisation can utilise its data to achieve business outcomes. Organisations now have access to features such as:

– Increased model size limits up to 100GB

– Refreshes up to 48 per day (currently unlimited if done via API)

– Paginated reports

– Enhanced Dataflow features

– XMLA endpoint connectivity

Excel Online connected to a Power BI Dataset

Technology – Power BI

Rein in the uncontrolled release of Excel from Power BI Connectivity.

By Andrew

Why – Power BI is growing to become the default service for gathering and transforming organisational data for presentation. Microsoft is making it possible for Excel Online to be connected directly to a Power BI Dataset. The benefit is that a single point of truth is used consistently throughout the organisation, and that people who are comfortable using Excel for analysis can engage with organisationally curated data through the connected version of Excel.

Summary – Excel Online is now able to integrate with organisational Power BI Datasets.

Take away – Utilising Excel Online with Power BI datasets will help organisations rein in the uncontrolled release of Excel documents and enable the use of organisation-controlled single sources of truth for their data.

Auto-scaling for Gen2 Premium

Technology – Power BI

Add cores to boost your performance.

By Andrew

Why – Previously, when buying Power BI Premium, organisations bought based on an expected requirement of compute capacity. This led them to a binary choice: purchase on a cost-efficient model of buying for normal expected usage, or overspend to cater for known spikes. With this new announcement, Microsoft has removed the need to make that choice. Organisations can now allow extra cores to be added to boost the compute capacity when needed by autoscaling on demand. The cores will be added automatically when the demand threshold requires it. The extra cores are made available to the capacity for 24 hours after they are first started and are billed through the organisation’s Azure billing.

Summary – Microsoft enables organisations to control their spending while meeting user demands for fast and efficient reporting.

Take away – By allowing additional cores to come online when required, organisations can ensure effective performance for their report requirements. The price Microsoft has released for this is USD$85 per vCore per 24 hours. Organisations can control how many cores are to be made available to a capacity. The use of these extra cores is meant to cater to spikes in requirements and shouldn’t follow a daily usage pattern. If an organisation finds that the auto-scaling is being consumed on a regular basis, it’s recommended to look at the models being used within that capacity and look for ways of improving their efficiency.
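The pricing above lends itself to a quick back-of-the-envelope calculation. The Python sketch below uses the USD$85 per vCore per 24 hours figure quoted in this article; the `looks_like_regular_use` helper and its 50% threshold are our own hypothetical rule of thumb for spotting autoscaling that has become routine, not a Microsoft guideline.

```python
AUTOSCALE_RATE_USD = 85  # per vCore per 24 hours, as quoted in the article


def autoscale_cost(vcores: int, windows: int) -> int:
    """Cost in USD when `vcores` extra cores are active for `windows` 24-hour windows."""
    return vcores * windows * AUTOSCALE_RATE_USD


def looks_like_regular_use(windows: int, days_observed: int, threshold: float = 0.5) -> bool:
    """Hypothetical check: flag capacities that autoscale on more than `threshold` of days."""
    return days_observed > 0 and windows / days_observed > threshold


# Two extra vCores triggered on three separate days in a month.
print(autoscale_cost(vcores=2, windows=3))                 # 2 * 3 * 85 = 510
print(looks_like_regular_use(windows=3, days_observed=30))  # False
```

If the second function starts returning `True` for a capacity, that is the signal described above to review the models in that capacity rather than keep paying for daily autoscaling.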

Please look out for detailed articles on the topics described here in the near future.